“You don’t build production shit on your machine!” - Yuval Oren

The problem

About a month ago we started getting weird issues with one of our Node.js services, the one in charge of extracting data from the database to our analytics platform. After a while, we discovered that it was extracting only partial data, without producing any errors.

We spent long hours trying to catch the problem, which was difficult since we couldn’t reproduce it on either our development machines or our test clusters.

Furthermore, the issue would go away for a while after some tweaks, then mysteriously come back.

Investigation and finding the root cause

At some point someone on our team had a breakthrough: he suspected that the problem manifested itself only when we deployed the code to production from the machine of one of our senior developers.

A quick comparison of the Docker images revealed the difference and eventually the cause: the problematic Docker images contained an old version of Node.js, v14.5.0, while the current version was v14.16.0.

It appeared that the mysql2 package we use has some kind of incompatibility with Node.js versions older than v14.14.0.

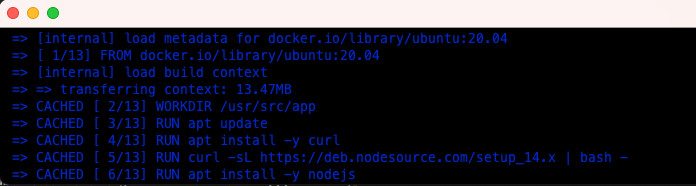
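One way to surface such a mismatch early is to make the image build fail when the installed Node.js is older than the version the service needs. The following is a minimal sketch, assuming the v14.14.0 threshold mentioned above; the guard itself is hypothetical and was not part of the original Dockerfile:

```dockerfile
# Hypothetical guard, placed right after the step that installs Node.js.
# Fails the build if the installed Node.js is older than 14.14.0
# (threshold taken from the incompatibility described above).
RUN node -e "const [major, minor] = process.versions.node.split('.').map(Number); \
    if (major < 14 || (major === 14 && minor < 14)) { \
      console.error('Node.js ' + process.versions.node + ' is older than 14.14.0'); \
      process.exit(1); \
    }"
```

Because this is a new instruction, Docker runs it even on a machine that still holds a stale cached layer underneath it, so an outdated Node.js stops the build instead of reaching production.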

Investigating the Dockerfile revealed the root cause:

We installed Node.js in the Dockerfile using the setup script from the NodeSource binary distribution:

```dockerfile
RUN curl -sL https://deb.nodesource.com/setup_14.x | bash -
```

Since the instruction that installs Node.js never changes in the Dockerfile, Docker kept reusing the cached layer on that machine, oblivious to the newer Node.js versions published upstream.
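A minimal sketch of one way to avoid the stale layer, assuming the service can run on the official Node.js image: pin an exact version in the tag, so that moving to a new Node.js release is an explicit Dockerfile change. The version shown is simply the one that was current at the time.

```dockerfile
# Pin an exact Node.js version instead of whatever a setup script installs.
# Bumping the version edits this line, which invalidates the cached layers.
FROM node:14.16.0-alpine
```

Alternatively, running `docker build --pull --no-cache` against the existing Dockerfile ignores cached layers entirely, at the cost of slower builds.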

Lessons Learnt

Use CI for building your production code

Even the most basic CI setup will take you a long way, first and foremost by making your builds predictable.

You don’t build production shit on your machine!

Make sure that your builds are predictable

The same source should produce the same image, regardless of where or when it is built.

Remove any dependency on previous state that could affect your builds.

Where possible, reduce dependence on external services and sources. Cache them if you can.
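As a sketch of what this can look like for npm packages, assuming a `package-lock.json` is committed next to `package.json`: `npm ci` installs exactly the versions recorded in the lock file and fails if the two files disagree, rather than silently updating the lock file.

```dockerfile
# Assumes package-lock.json is committed to the repository.
FROM node:14.16.0-alpine
WORKDIR /usr/src/app
COPY package.json package-lock.json ./
# Install exactly what the lock file records; skip devDependencies.
RUN npm ci --production
```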

Use Docker best practices

Use official images

If you can, use official Node.js Docker images, like node:lts-alpine. Avoid the Debian-based images, since according to Snyk they contain several security vulnerabilities.

Use Docker caching

Be aware of Docker layer caching and use it to your benefit.

Copy only the package manifest files before installing dependencies:

```dockerfile
COPY package*.json ./
RUN npm install --production && npm cache clean --force
```

The same goes for generated files, such as Thrift artifacts:

```dockerfile
COPY thrift-src ./thrift-src
RUN npm run generate-thrift
```

These steps will use the Docker cache and will not trigger costly installations or code generation unless the relevant files have changed.

Use multi-stage builds

If you require extra packages for the npm install stage or for code generation, use multi-stage builds, so that those packages stay out of the final image.

```dockerfile
# Install build dependencies
FROM node:lts-buster AS base
RUN apt-get update && apt-get install -y \
    build-essential \
    git \
    && rm -rf /var/lib/apt/lists/*

# Generate production node_modules
FROM base AS modules
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm install --production && npm cache clean --force

# Install dev-dependencies, generate Thrift artifacts and compile TypeScript
FROM modules AS build
WORKDIR /usr/src/app
RUN npm install && npm cache clean --force
COPY thrift-src ./thrift-src
RUN npm run generate-thrift
COPY . .
RUN npm run tsc

# Prepare final image
FROM node:lts-alpine
WORKDIR /usr/src/app
COPY --from=modules /usr/src/app/node_modules /usr/src/app/node_modules
COPY --from=build /usr/src/app/thrift-codegen /usr/src/app/thrift-codegen
COPY --from=build /usr/src/app/js-temp /usr/src/app/js-temp
COPY . .
# Exec form runs node directly as PID 1, so it receives stop signals
CMD ["node", "js-temp/app.js"]
```

The resulting image will be more secure and will take considerably less disk space.